What is the Best Way to Think of Prompt Engineering?

A guide to understanding and mastering prompt engineering, exploring effective mental models and best practices for crafting AI prompts.

The Essence of Prompt Engineering

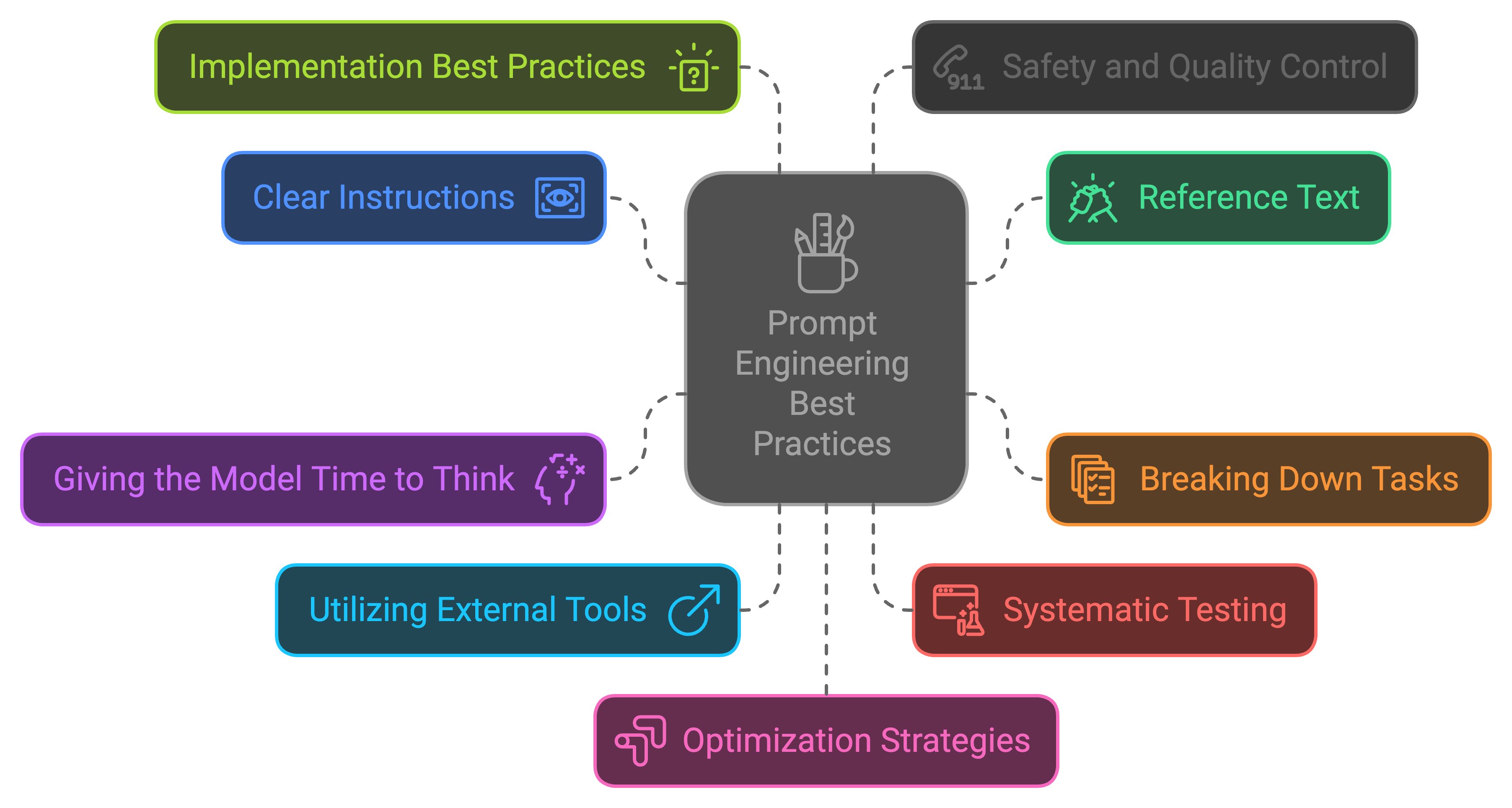

Prompt engineering is a hot topic in the world of AI, with countless articles available online. However, much of the advice boils down to common-sense writing principles:

- Be clear and specific about what you want to achieve

- Avoid contradictions

- Focus on one thing at a time

Drawing Parallels with Programming

In many ways, prompt engineering is similar to programming. Just as a function in programming should ideally perform a single, well-defined task, the best and most stable prompts should aim to accomplish one thing, not ten different things. This approach minimizes the chances of confusion or errors.
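To make the function analogy concrete, here is a minimal sketch of one overloaded prompt split into single-purpose prompts. Everything in it is an illustrative assumption: the prompt wording, the task names, and the `build_prompt` helper are hypothetical and not part of any Langtail API.

```python
from string import Template

# One overloaded prompt that asks for three things at once.
# It is harder to test and debug because a failure could come from any of the tasks.
OVERLOADED_PROMPT = Template(
    "Summarize the review, classify its sentiment, and draft a reply:\n$review"
)

# The same work split into single-purpose prompts, one task each.
# Task names and wording are illustrative assumptions.
SINGLE_PURPOSE_PROMPTS = {
    "summarize": Template("Summarize this customer review in one sentence:\n$review"),
    "classify": Template(
        "Classify the sentiment of this review as positive, negative, or neutral. "
        "Answer with one word:\n$review"
    ),
    "reply": Template("Draft a polite two-sentence reply to this review:\n$review"),
}


def build_prompt(task: str, review: str) -> str:
    """Return the filled-in prompt for exactly one task."""
    if task not in SINGLE_PURPOSE_PROMPTS:
        raise KeyError(f"Unknown task: {task!r}")
    return SINGLE_PURPOSE_PROMPTS[task].substitute(review=review)


if __name__ == "__main__":
    review = "The app is fast, but login fails every other day."
    for task in SINGLE_PURPOSE_PROMPTS:
        print(f"--- {task} ---")
        print(build_prompt(task, review))
```

Each template can now be tested and refined on its own, much like a small function with a single responsibility.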

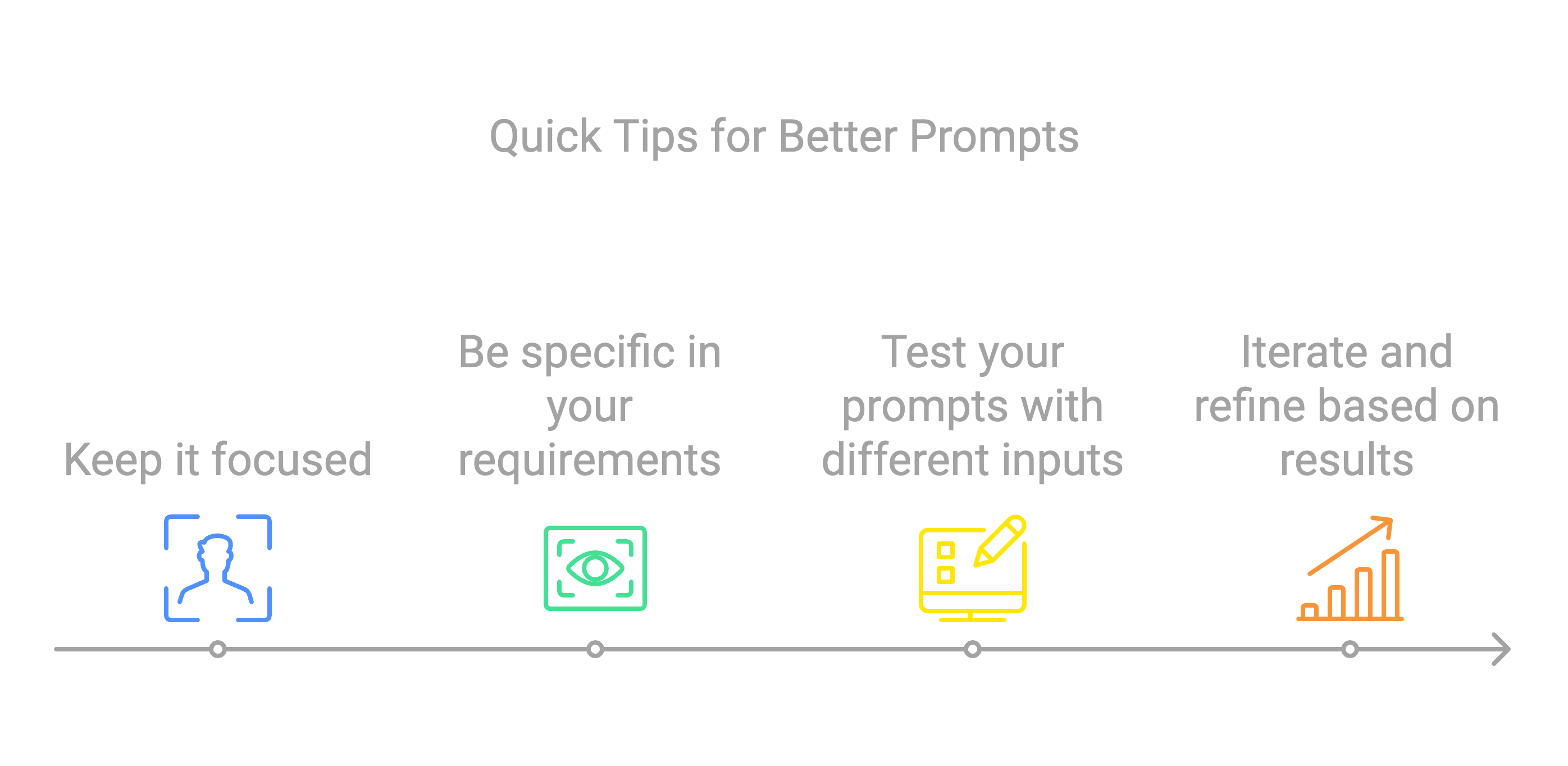

Quick Tips for Better Prompts:

- Keep it focused - one prompt, one task

- Be specific in your requirements

- Test your prompts with different inputs

- Iterate and refine based on results
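The tip about testing with different inputs could look roughly like the sketch below. It is a hedged illustration, not a real integration: `fake_model` is a hypothetical keyword-based stand-in for an actual LLM call so that the example runs offline, and the prompt wording and test cases are assumptions.

```python
PROMPT_TEMPLATE = (
    "Classify the sentiment of this review as positive, negative, or neutral. "
    "Answer with one word.\nReview: {review}"
)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: a simple keyword rule."""
    review = prompt.split("Review: ", 1)[1].lower()
    if "love" in review or "great" in review:
        return "positive"
    if "broken" in review or "refund" in review:
        return "negative"
    return "neutral"


def run_cases(model, cases):
    """Run every (input, expected) pair through the prompt and collect failures."""
    failures = []
    for review, expected in cases:
        answer = model(PROMPT_TEMPLATE.format(review=review)).strip().lower()
        if answer != expected:
            failures.append((review, expected, answer))
    return failures


# A few varied inputs, including a neutral one that contains no strong signal.
CASES = [
    ("I love this app", "positive"),
    ("It arrived broken and I want a refund", "negative"),
    ("It is a phone case", "neutral"),
]

if __name__ == "__main__":
    failed = run_cases(fake_model, CASES)
    print(f"{len(CASES) - len(failed)}/{len(CASES)} cases passed")
    for review, expected, answer in failed:
        print(f"FAIL: {review!r} expected {expected!r}, got {answer!r}")
```

Swapping `fake_model` for a real model call keeps the loop unchanged: edit the prompt, rerun the cases, and compare the failures.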

Communicating with AI like Humans

When crafting prompts, it's helpful to think of the process as communicating with another human being. Consider this scenario:

If you were to give a colleague a task with ten different responsibilities and multiple ways to interpret it, there's a high chance they would make mistakes. The same applies to LLMs.

The key skill in prompt engineering is the ability to clearly and unambiguously convey what you want, without contradictions.

The Future of Prompt Engineering

While manually writing prompts is the current norm, the future lies in utilizing LLMs themselves to assist in the process. Here's what's coming:

- AI agents specialized in prompt engineering

- Interactive dialogue systems for prompt refinement

- Automated prompt optimization

- Real-time feedback and suggestions

At Langtail, we've created tools to support this future, including our Prompt Improver - an AI agent designed to help you write better prompts through interactive refinement and suggestions.

Prompt Engineering in Real-World Applications

For companies developing LLM-based features or applications, successful implementation requires:

- Data Collection:

  - User interaction datasets

  - Real-world usage patterns

  - Edge cases and exceptions

- Testing & Iteration:

  - Systematic prompt testing

  - Performance monitoring

  - Continuous refinement

- Tooling & Infrastructure:

  - Experimentation platforms

  - Multiple LLM provider testing

  - Performance analytics
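As a rough sketch of how these pieces fit together, the example below runs two prompt variants against two providers over a small dataset and reports a pass rate for each combination. All names are assumptions made for illustration: `provider_a` and `provider_b` are hypothetical stubs rather than real LLM clients, and the dataset is a toy stand-in for data collected from real interactions.

```python
from itertools import product

# Toy dataset of (input, expected output) pairs.
DATASET = [("2+2", "4"), ("3*3", "9"), ("10-7", "3")]
ANSWERS = dict(DATASET)

PROMPT_VARIANTS = {
    "terse": "Compute: {question}",
    "strict": "Compute the result and answer with the number only: {question}",
}


def provider_a(prompt: str) -> str:
    """Hypothetical provider stub that always answers with the bare number."""
    question = prompt.rsplit(": ", 1)[1]
    return ANSWERS[question]


def provider_b(prompt: str) -> str:
    """Hypothetical provider stub that adds extra words unless told not to."""
    question = prompt.rsplit(": ", 1)[1]
    answer = ANSWERS[question]
    return answer if "number only" in prompt else f"The answer is {answer}."


PROVIDERS = {"provider_a": provider_a, "provider_b": provider_b}


def pass_rates():
    """Return the exact-match pass rate for every (variant, provider) pair."""
    rates = {}
    for (variant, template), (name, call) in product(
        PROMPT_VARIANTS.items(), PROVIDERS.items()
    ):
        passed = sum(
            call(template.format(question=question)).strip() == expected
            for question, expected in DATASET
        )
        rates[(variant, name)] = passed / len(DATASET)
    return rates


if __name__ == "__main__":
    for (variant, name), rate in pass_rates().items():
        print(f"{variant:<7} {name:<11} {rate:.0%}")
```

With these stubs, the terse variant fails on `provider_b` because the reply contains extra words, while the strict variant passes on both. That is the kind of difference systematic testing across providers is meant to surface.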

The Langtail Approach

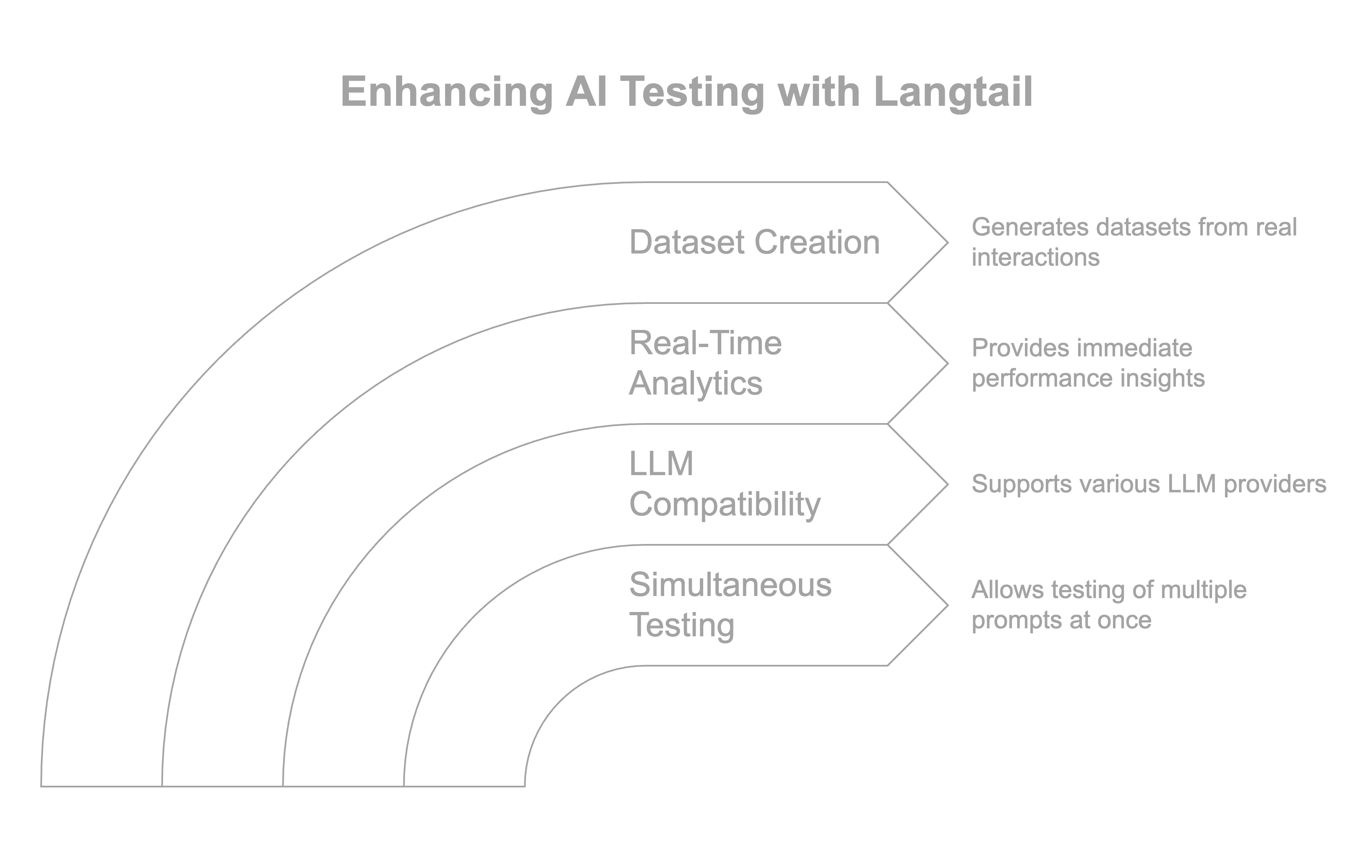

At Langtail, we focus on empowering non-developers – domain experts, marketing professionals, product owners, and QA teams. Our platform offers:

- Simultaneous testing of different prompts

- Multiple LLM provider compatibility

- Real-time performance analytics

- Dataset creation from actual interactions

Next Steps for Prompt Engineering Success

- Start Small:

  - Focus on single-purpose prompts

  - Test thoroughly before expanding scope

  - Document what works and what doesn't

- Use Available Tools:

  - Try our Prompt Improver

  - Experiment with AI-assisted prompt refinement

  - Build a library of successful prompts

- Stay Updated:

  - Follow prompt engineering developments

  - Participate in the community. Join our Discord

  - Share your learnings and experiences
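One lightweight way to document what works and build a prompt library is a small in-memory registry, sketched below. This is only an assumption about how such a library might be organized; `PromptRecord` and `PromptLibrary` are hypothetical names, not part of any existing tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptRecord:
    """A single-purpose prompt plus notes on what worked and what did not."""
    name: str
    template: str
    purpose: str
    notes: List[str] = field(default_factory=list)


class PromptLibrary:
    """Hypothetical in-memory registry of prompts, keyed by name."""

    def __init__(self) -> None:
        self._records: Dict[str, PromptRecord] = {}

    def add(self, record: PromptRecord) -> None:
        # Refuse silent overwrites so earlier findings are not lost.
        if record.name in self._records:
            raise ValueError(f"Prompt {record.name!r} already exists")
        self._records[record.name] = record

    def note(self, name: str, text: str) -> None:
        """Attach an observation to an existing prompt."""
        self._records[name].notes.append(text)

    def get(self, name: str) -> PromptRecord:
        return self._records[name]


if __name__ == "__main__":
    library = PromptLibrary()
    library.add(
        PromptRecord(
            name="summarize-review",
            template="Summarize this customer review in one sentence:\n{review}",
            purpose="One-sentence summaries of customer reviews",
        )
    )
    library.note("summarize-review", "Works better with an explicit length limit.")
    print(library.get("summarize-review"))
```

In practice the records would more likely live in version control or a dedicated tool, but the shape stays the same: one entry per single-purpose prompt, with notes kept next to it.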

Conclusion

While prompt engineering may seem daunting at first, approaching it with the right mindset and tools can make all the difference. By thinking of prompts as clear, focused communications and leveraging AI assistance to refine them, we can unlock the full potential of LLMs and build more reliable, effective AI applications.
