The Langtail Public Beta Is Here

Introducing Langtail, a collaborative platform designed to streamline the development, testing, and deployment of generative AI models. Born from firsthand experience with the challenges of building AI applications, Langtail aims to simplify the process, save valuable time, and enhance team collaboration. Let's dive into the details of our public beta and see how Langtail can help you.

The Journey to Langtail

Langtail emerged from the challenges we faced while developing an AI assistant. We know firsthand how difficult it is to build a chatbot that handles real-world scenarios, and how much time it takes to get it right. We're not alone in this struggle: many companies we talked to face similar challenges when building apps with LLMs and other generative AI models.

The lack of tools for collaborating on prompts, debugging, and evaluating capabilities made the process manual and time-consuming. There was an urgent need for a more streamlined approach, and so the idea for Langtail was born: an all-in-one platform for generative AI models that also provides the tooling needed for collaboration, testing, analytics, and more.

The current version of Langtail is just the beginning, and it's not yet the fully fledged platform we envision. Even at this early stage, though, the public beta is already useful and is serving a select group of partners.

What Can You Achieve with Langtail's Public Beta?

Collaborative Workspace

Invite your team to a shared workspace. Work together on prompts, create evaluation tests, and much more, so your team can collaborate more efficiently.

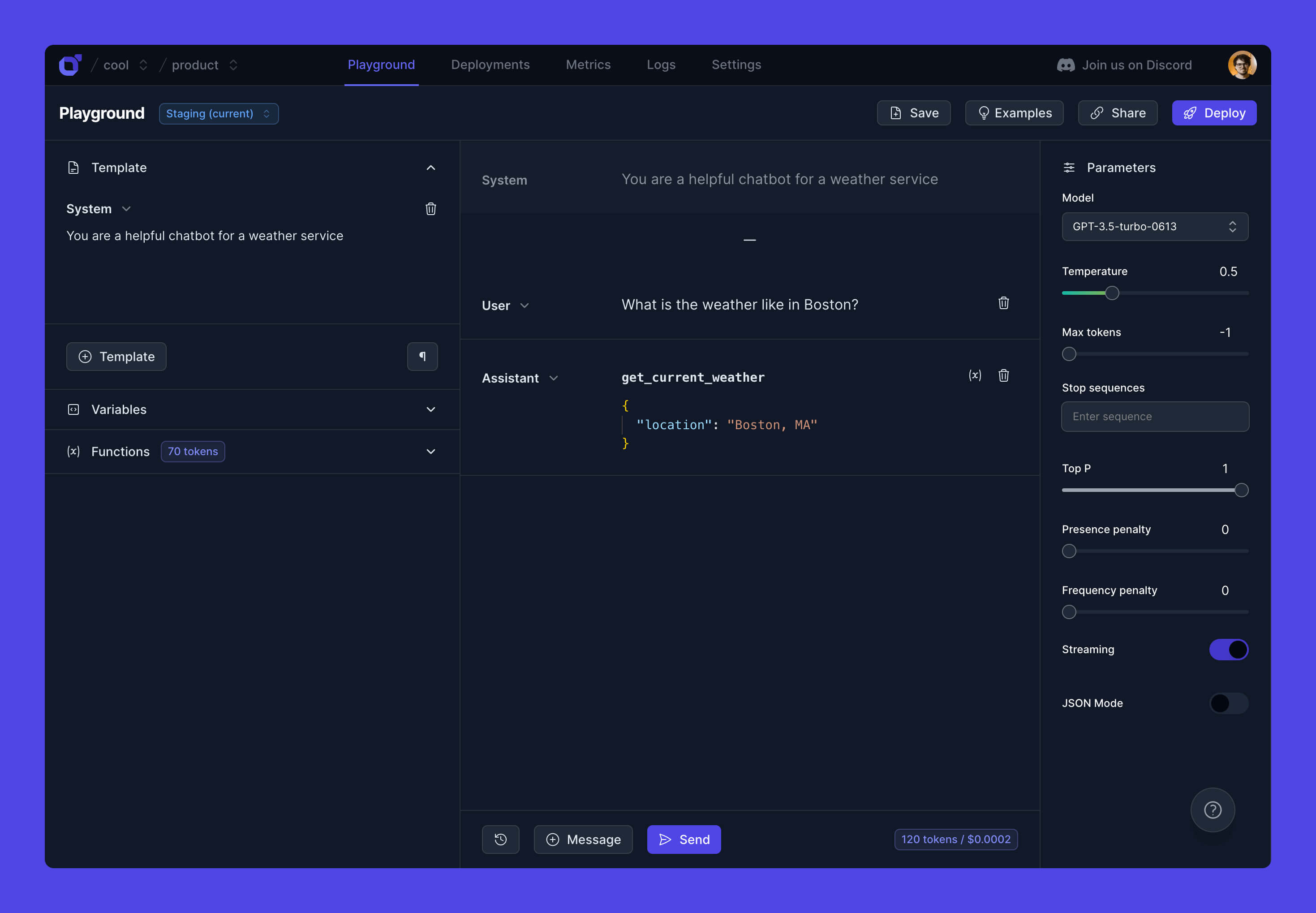

Playground for Rapid Prototyping

Our visual editor allows even non-developers to experiment with and refine model prompts. This feature democratizes the prototyping process, making it accessible and intuitive for all team members.

Versatile Prompt Deployment

Once you have a prompt you're satisfied with, deploying it is straightforward. Langtail supports multiple deployment stages, including preview, staging, and production, so prompts fit seamlessly into your development workflow.

Logging and Analytics

Access detailed logs and analytics for every prompt, including the inputs sent to the LLM, the outputs it returns, cost, and performance statistics. These insights help you make better decisions.

Test Prompts

Use our integrated testing environment to run a series of inputs against your prompt. This gives you greater confidence that the model behaves as intended and produces the outcomes you expect.

Support for OpenAI and Azure OpenAI APIs

Langtail currently supports the OpenAI and Azure OpenAI APIs, and we plan to add more providers in the future, giving you more flexibility as your AI stack evolves.

Success Stories from Early Adopters

Langtail simplifies the development and testing of Deepnote AI, enabling our team to focus on further integrating AI features into our product.

— Ondřej Romancov, Software Engineer at Deepnote

As a prompt engineer, I appreciate Langtail's user-friendly interface and the freedom to test independently of the backend team. The team is super responsive and open to feedback. I highly recommend Langtail.

— Yiğit Konur, Head of AI at Wope

What's Next for Langtail?

We're proud of where Langtail stands today, but we're even more excited about where it's going. Here's a sneak peek of what's cooking in our development kitchen:

- Support for More LLM Providers: We're continually working to integrate more LLM providers into Langtail. Anthropic is next on our list, and we're actively working on support for it.

- Live Data Evaluation Tests: We're developing a feature that lets you run evaluation tests on live data. This real-time feedback will alert you immediately if your LLM behaves differently than expected, so you can take corrective action right away.

- Per-API-Key Budgets and Rate Limiting: To give you more control over your usage, we're introducing budgets and rate limits per API key. This will help you manage resource consumption more effectively and avoid unwelcome surprises.

That said, our future plans aren't just about us or the features we think are cool. We're building Langtail for you, the developers who use it every day, so we want to hear from you. What features would make your life easier? How can we better serve your needs? Your feedback, ideas, and suggestions aren't just welcome; they're vital to making Langtail the best it can be.

Be Part of the Langtail Community

Join our vibrant community on Discord, where you can connect with other Langtail users and contribute to the conversation. We believe building world-class tools is a shared journey.

Pricing: Free, Startup-Friendly, and Enterprise-Ready

We understand that every developer, startup, and enterprise has unique needs and budgets. That's why we've designed a flexible pricing model:

- Public Beta: During this phase, Langtail is completely free. We encourage you to explore and experiment without any financial barriers.

- Free Tier: We're committed to maintaining a free tier for individual developers, students, and small teams, even after the beta phase.

- Startups: We're aligning our pricing with your growth. Instead of charging per team member, we'll charge a flat fee plus a cost per request, so your costs scale with your actual usage rather than your headcount.

- Enterprise: Security, compliance, and dedicated support are paramount for our enterprise customers. Our enterprise plan will cover these requirements and include direct access to our support team.

We're still refining these plans and will share more details soon. Rest assured, our commitment is to provide a fair, transparent, and value-driven pricing model for all our users.

Join the Public Beta Now

Enough said. Sign up for our public beta, completely free of charge. Explore, experiment, and let us know what you think.

And don't forget to join our Discord community. It's the best place to share your thoughts, ask questions, and stay updated on all things Langtail. We're excited to have you on board!
