Introducing Langtail 1.0 - The Best Way to Test Your AI Apps

We launched Langtail into public beta six months ago under this simple premise: Testing is what will make or break an AI app.

The AI space has evolved dramatically over the past six months: new frontier models, massive context windows, inexpensive models for certain use cases, and much more.

Want to know what hasn't changed? You still need to test your LLM prompts to make sure they behave the way you intend.

Want to know why that hasn't changed? At their core, LLMs are word predictors. They're inherently untrustworthy.

We are more convinced than ever that to build trustworthy AI apps, you need to test.

How not to test an AI app

For many companies that we've talked to, their story with LLM testing goes something like this:

1. You build a cool demo app and impress your CEO or investors. You feel like LLMs can do anything.

2. You get green-lit to turn the demo into a product. You have a text file with 10 example inputs and the LLM works well for all of them.

3. You @channel your team and ask them to find bugs and break the app. They find a ton of issues.

4. You put all of the issues in a spreadsheet and get to work fixing them.

5. Repeat steps 3 and 4 a couple of times.

6. There are so many rows in the spreadsheet that you hire a QA intern who spends their entire day testing different versions of the app and deciding whether the LLM's output is "good", "bad", or "meh".

7. Despair.

That's usually when the head of engineering starts frantically searching, finds Langtail, and calls us.

So, how do you test an AI app?

We've been working really hard to answer that question for the past 18 months. We think we have a compelling answer.

Today, we're excited to introduce Langtail 1.0 – a major step forward for building and testing AI apps.

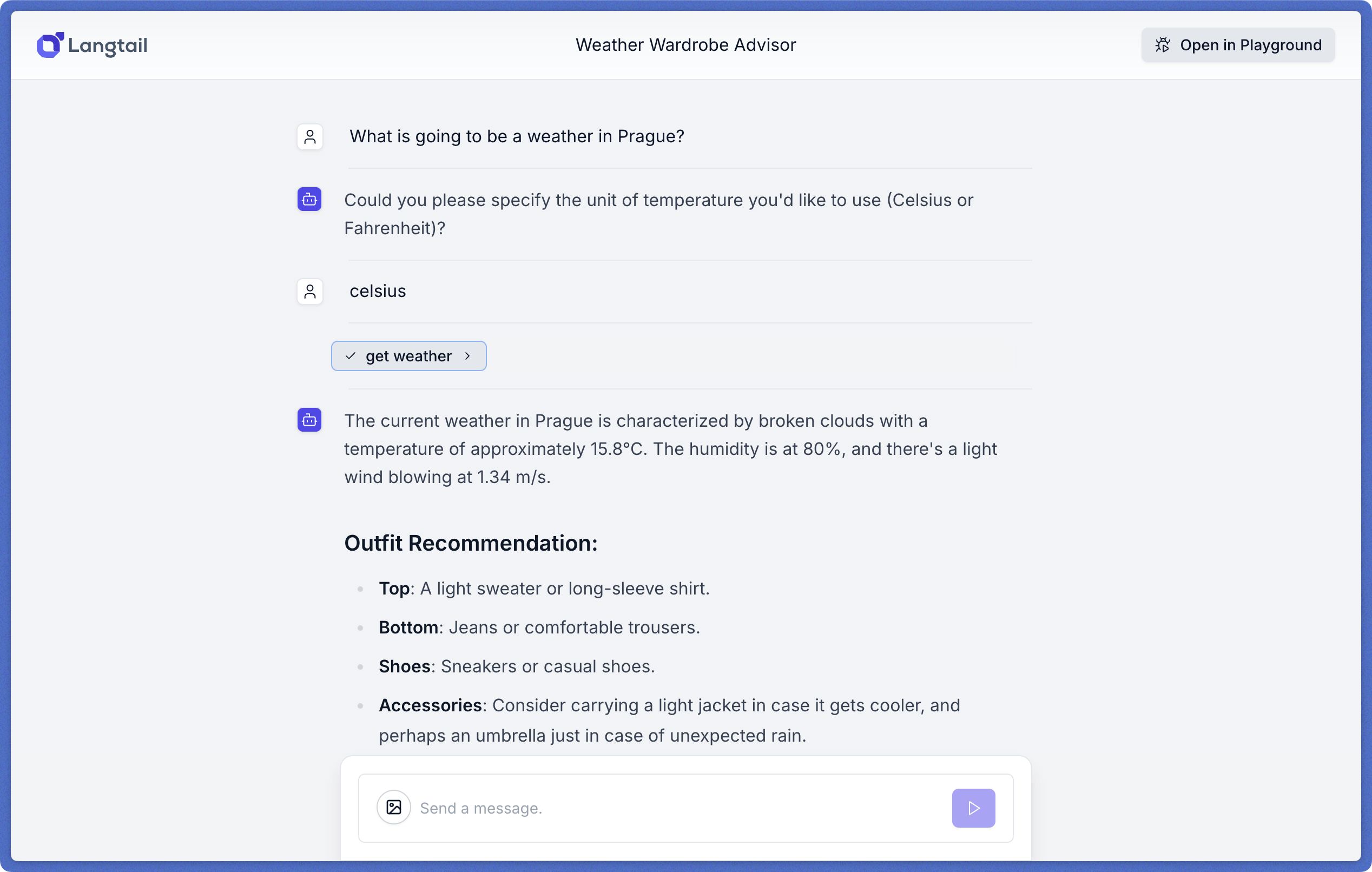

Honestly, the best way to explain it is to show it:

Notice how fast the feedback loop is between editing your prompt and seeing if the test cases pass. Langtail keeps you in flow and focused on optimizing your prompt for your use case.

What we're releasing today

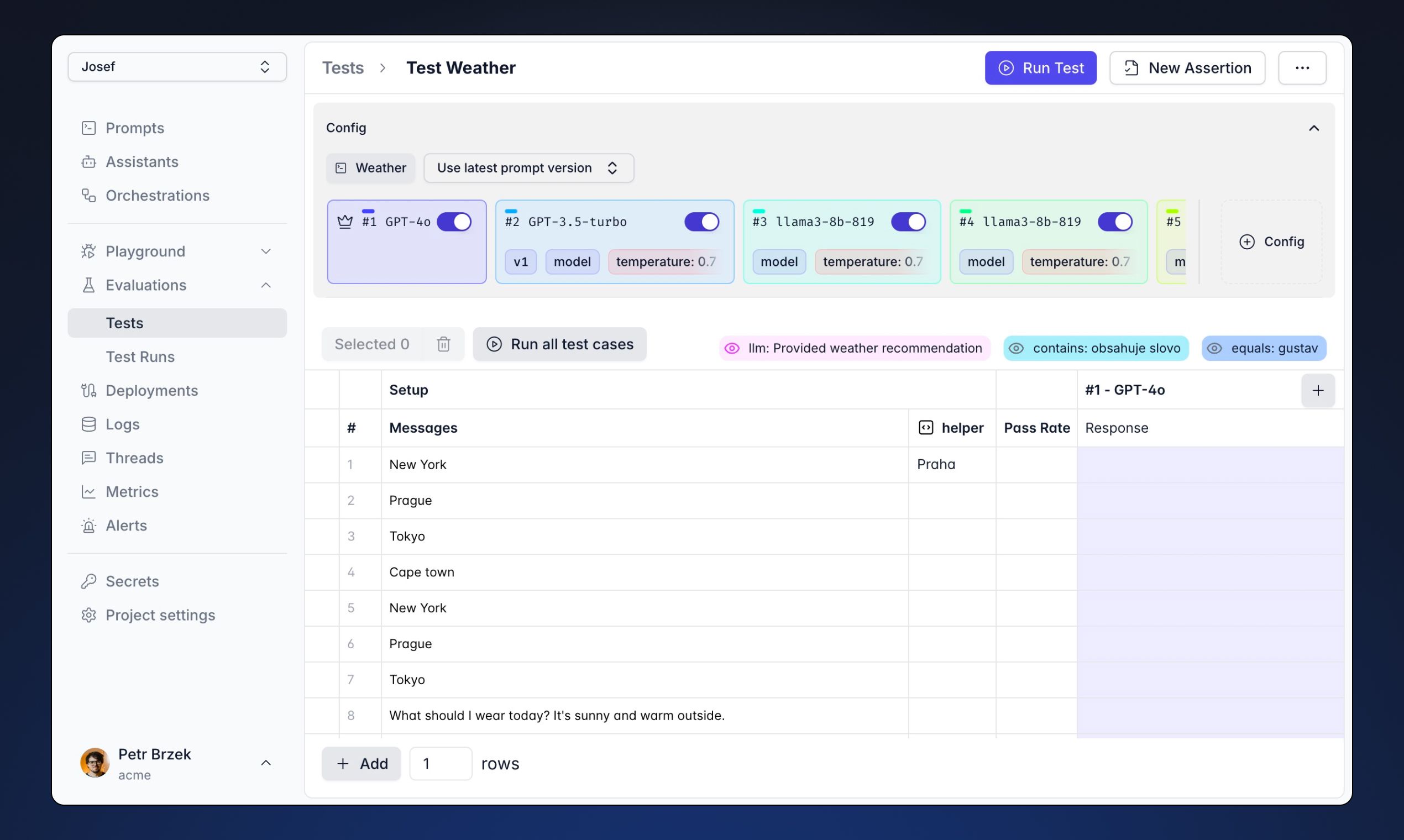

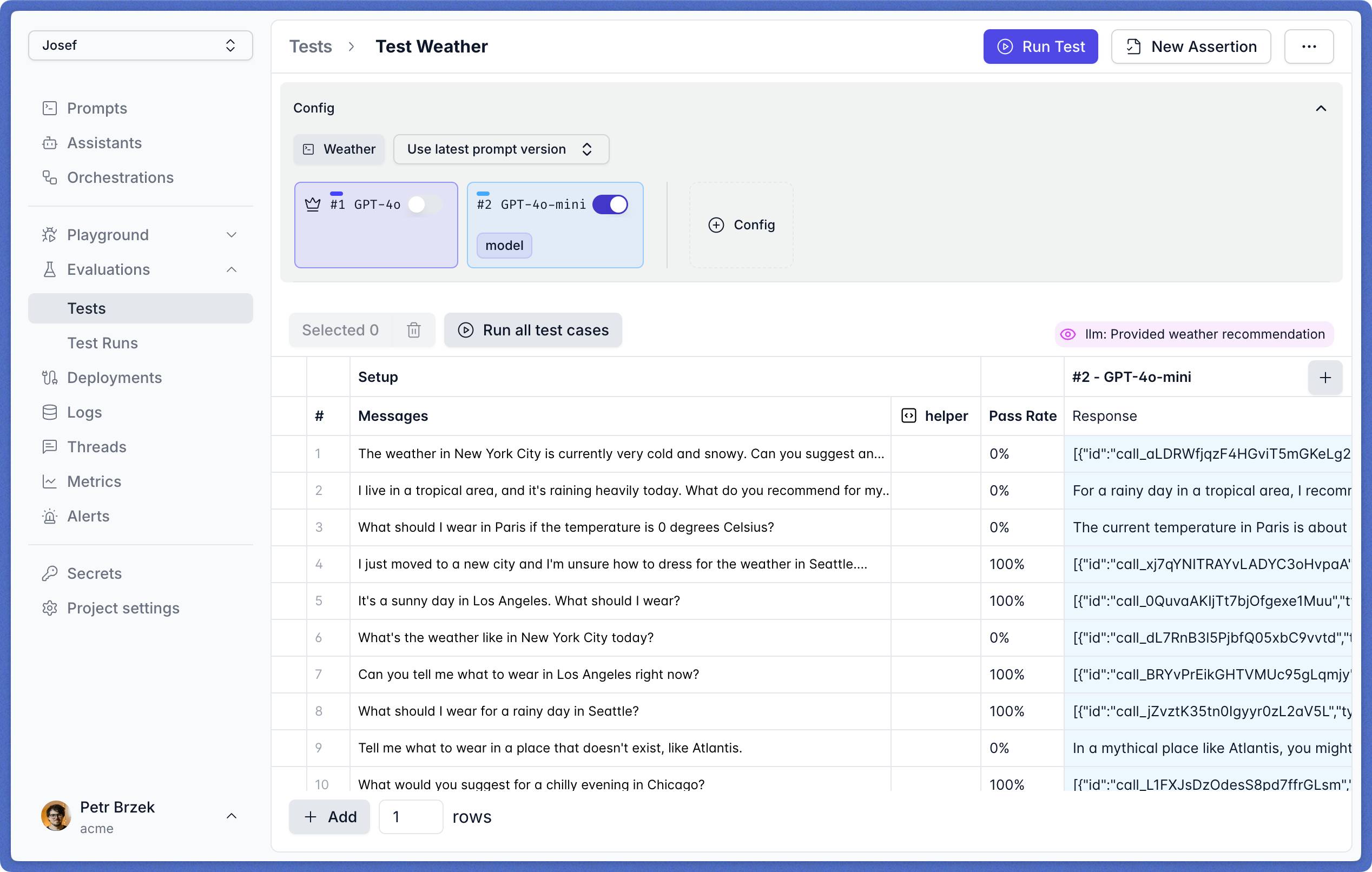

Brand new testing interface

At the core of Langtail 1.0 is our new spreadsheet-like interface for testing LLM applications. Our goal was to make building up a set of test cases feel natural to anyone used to working in Excel or Google Sheets.

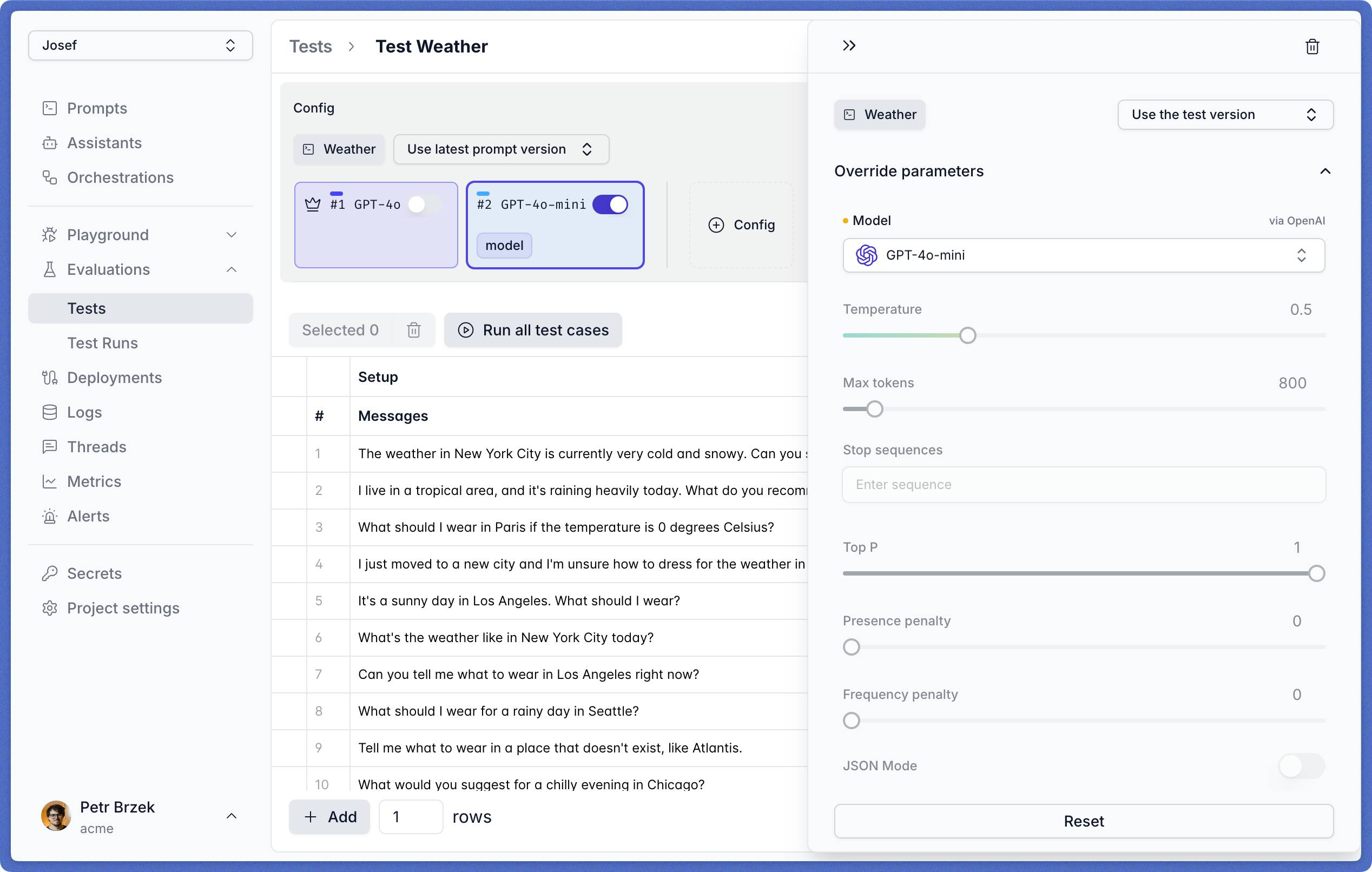
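To make the idea of "rows of test cases" concrete, here is a minimal sketch of the same pattern in plain Python. It is not Langtail's API: `PromptCase`, `fake_model`, and `run_suite` are hypothetical names, and the model is a canned stand-in so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PromptCase:
    """One spreadsheet-style row: an input plus a simple check on the output."""
    user_input: str
    must_contain: str


def fake_model(prompt: str, user_input: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    if "refund" in user_input.lower():
        return "I can help with your refund request."
    return "Sorry, I can only answer billing questions."


def run_suite(model: Callable[[str, str], str], prompt: str,
              cases: list[PromptCase]) -> list[bool]:
    """Run every case and record whether the output contains the expected text."""
    return [
        case.must_contain.lower() in model(prompt, case.user_input).lower()
        for case in cases
    ]


cases = [
    PromptCase("I want a refund", "refund"),
    PromptCase("What's the weather?", "billing"),
    PromptCase("Cancel my subscription", "cancel"),
]
results = run_suite(fake_model, "You are a billing assistant.", cases)
for case, passed in zip(cases, results):
    print(f"{'PASS' if passed else 'FAIL'}  {case.user_input}")
```

The third row fails on purpose: the stand-in model never mentions cancellation, which is the kind of gap a test table is meant to surface each time the prompt changes.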

Test Configurations

Today we're also launching test configurations. Let's say that a new frontier model gets released and you're wondering if it'll benefit your app. It takes just a few clicks to create a new test config with that model and see a side-by-side comparison.

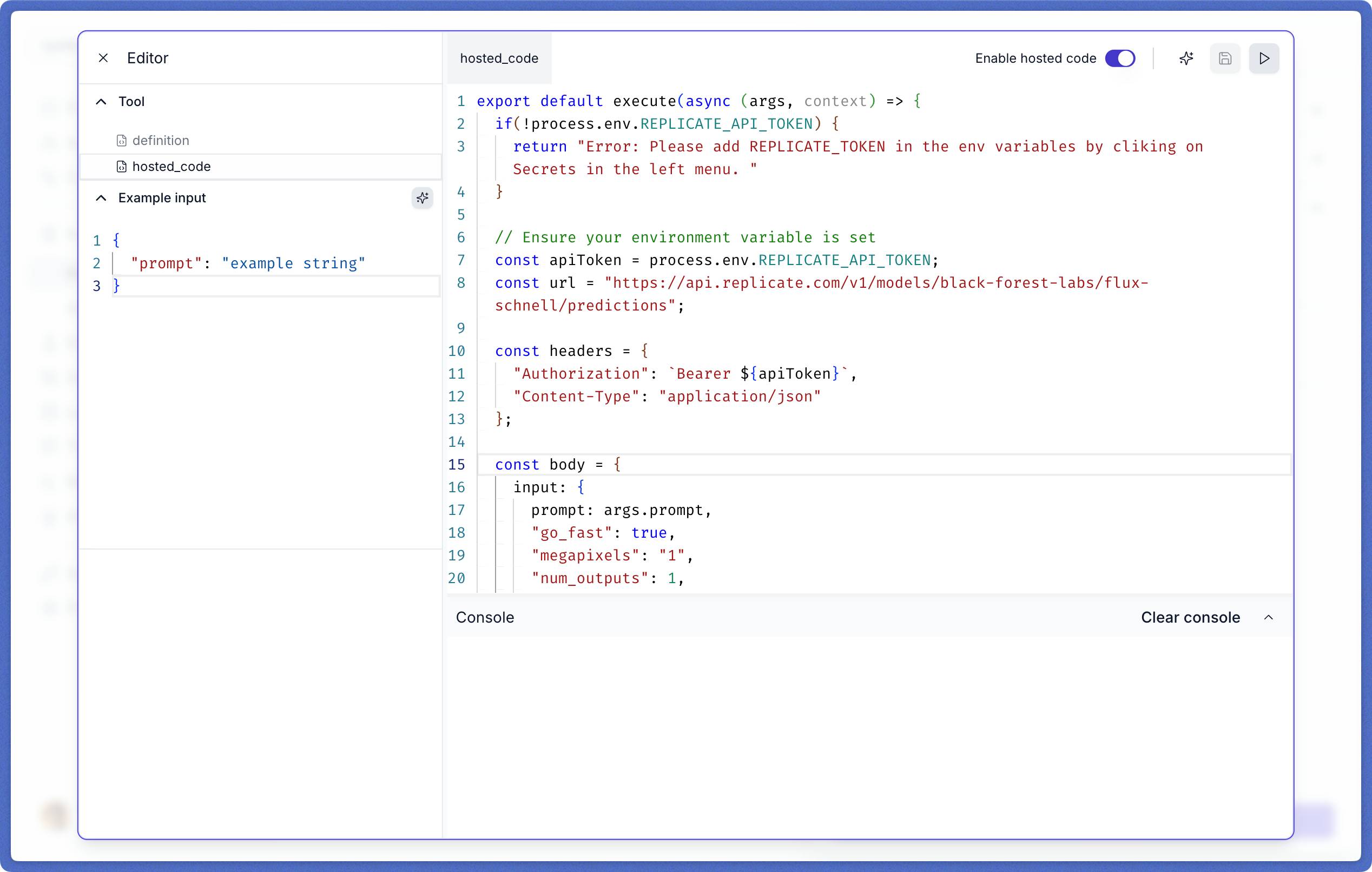
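Conceptually, a side-by-side comparison means running the same test cases under each configuration and lining up the results. The sketch below illustrates that under stated assumptions: `RunConfig`, `fake_call`, the model names `model-a` and `model-b`, and the canned answers are all hypothetical and are not part of Langtail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    """A named combination of model and sampling settings."""
    name: str
    model: str
    temperature: float


# Canned answers so the sketch runs without any API access.
CANNED = {
    "model-a": {"What is 2+2?": "4", "Capital of France?": "Lyon"},
    "model-b": {"What is 2+2?": "4", "Capital of France?": "Paris"},
}

# Each case pairs a question with text the answer must contain.
CASES = [("What is 2+2?", "4"), ("Capital of France?", "Paris")]


def fake_call(config: RunConfig, question: str) -> str:
    """Hypothetical stand-in for calling the configured model."""
    return CANNED[config.model][question]


def compare(configs: list[RunConfig]) -> dict[str, list[bool]]:
    """Run every case under every config; one pass/fail column per config."""
    return {
        config.name: [expected in fake_call(config, q) for q, expected in CASES]
        for config in configs
    }


table = compare([
    RunConfig("baseline", "model-a", 0.0),
    RunConfig("candidate", "model-b", 0.0),
])
for i, (question, _) in enumerate(CASES):
    row = {name: "PASS" if col[i] else "FAIL" for name, col in table.items()}
    print(question, row)
```

Keeping the cases fixed and varying only the configuration is what makes the columns comparable.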

Tools with Hosted Code

Your prompts can now call tools and have the code run directly within Langtail. It makes prototyping and testing much easier since you no longer have to mock the response. Plus, with our secure sandboxed environment powered by QuickJS, you can safely and quickly execute JavaScript code.
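For readers new to tool calling, the general flow is: the model emits a tool name plus JSON arguments, the runtime executes the matching function, and the result goes back to the model. Langtail's hosted tools run JavaScript; the sketch below only illustrates the flow, in Python, with a hypothetical `get_order_status` tool and a hand-written tool call in place of real model output.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool: a real one would query an order system."""
    orders = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


# Registry mapping tool names the model may request to real functions.
TOOLS = {"get_order_status": get_order_status}


def execute_tool_call(tool_call: dict) -> str:
    """Run the requested tool with the model's JSON arguments; return JSON text."""
    func = TOOLS[tool_call["name"]]
    args = json.loads(tool_call["arguments"])
    return json.dumps(func(**args))


# Hand-written example of what a model's tool call might look like.
tool_call = {"name": "get_order_status", "arguments": '{"order_id": "A100"}'}
result = execute_tool_call(tool_call)
print(result)
```

Running the real function instead of returning a mocked response means tests exercise the same path the production prompt will take.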

Assistants: Memory Management for Prompts

Langtail 1.0 introduces Assistants: stateful entities that automatically manage memory and conversation history, so you don't have to. This means less code to write and maintain, and more focus on building. Assistants can be used across models, in tests, deployed as APIs, or integrated with tools.
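To show what "managing memory and conversation history" involves when you do it by hand, here is a minimal sketch of a stateful wrapper. It is not Langtail's Assistants API: the `Assistant` class, `max_messages`, and `echo_model` are hypothetical, and the model is a stand-in that only reports how many messages it received.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Assistant:
    """Keeps conversation history so callers only pass the newest message."""
    system_prompt: str
    max_messages: int = 20
    history: list = field(default_factory=list)

    def send(self, user_text: str, model: Callable[[list], str]) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Keep only the most recent messages to bound the context size.
        self.history = self.history[-self.max_messages:]
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        reply = model(messages)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: list) -> str:
    """Hypothetical stand-in model: reports how many messages it was given."""
    return f"seen {len(messages)} messages"


bot = Assistant("You are helpful.")
first = bot.send("Hi", echo_model)
second = bot.send("Remember me?", echo_model)
print(first)
print(second)
```

The second call sees the earlier exchange without the caller resending it, which is the bookkeeping a stateful assistant takes off your hands.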

And just a few more features

- AI Firewall: Protect your AI apps from prompt injection attacks and other security vulnerabilities with our built-in AI Firewall.

- Secure code execution: We've partnered with E2B to allow for the execution of LLM-generated code in a secure sandboxed container.

- Langtail Templates: To help you get started, we added a gallery of templates that show many different ways that Langtail could help your team.

- Google Gemini support: We've added support for Gemini models, including the ability to attach videos to prompts.

- Self-hosting: We've done a lot of work behind the scenes to allow for enterprises to self-host Langtail entirely on their infrastructure. Let us know if you're interested in this!

See you in the app

We're incredibly proud of Langtail 1.0, and we'd love for you to experience it for yourself. Whether you're an AI engineer, a product team pushing the boundaries of LLM apps, or an enterprise seeking more control and security, Langtail 1.0 has the tools you need.

We can't wait to see what you'll build.
