Monitor Prompt Performance & Costs

Gain deep insights into LLM behavior, performance, and costs with powerful monitoring and analytics tools.

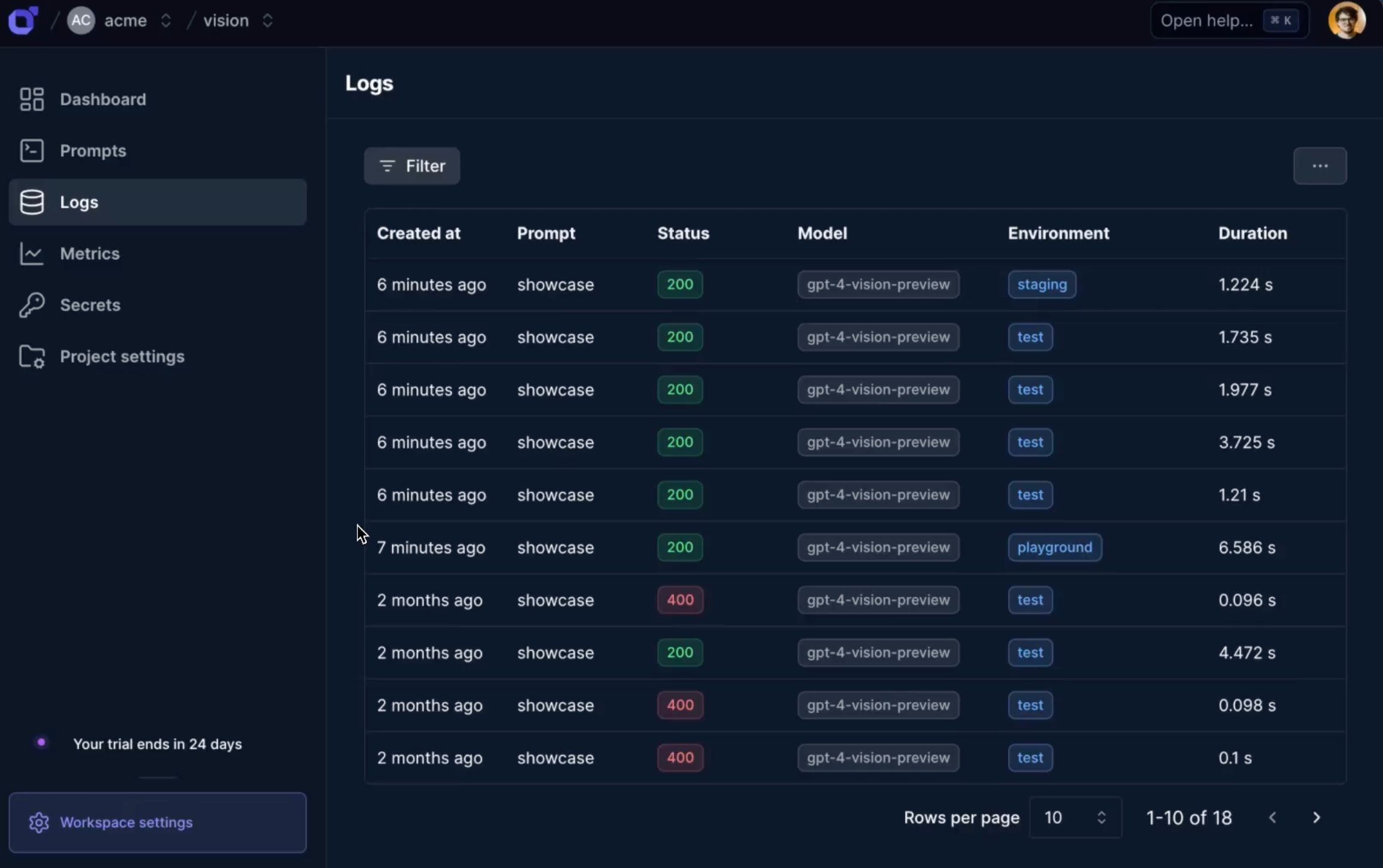

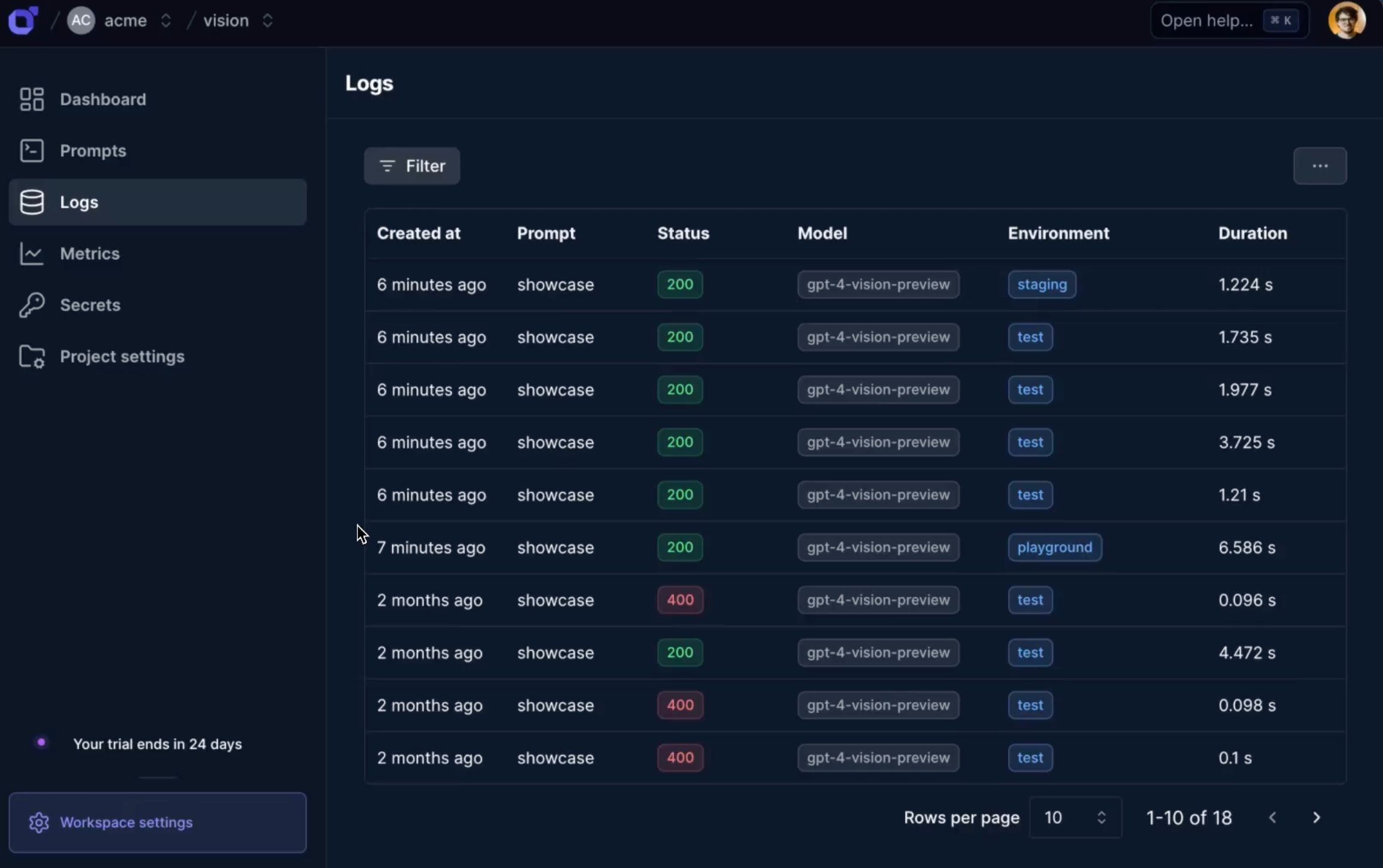

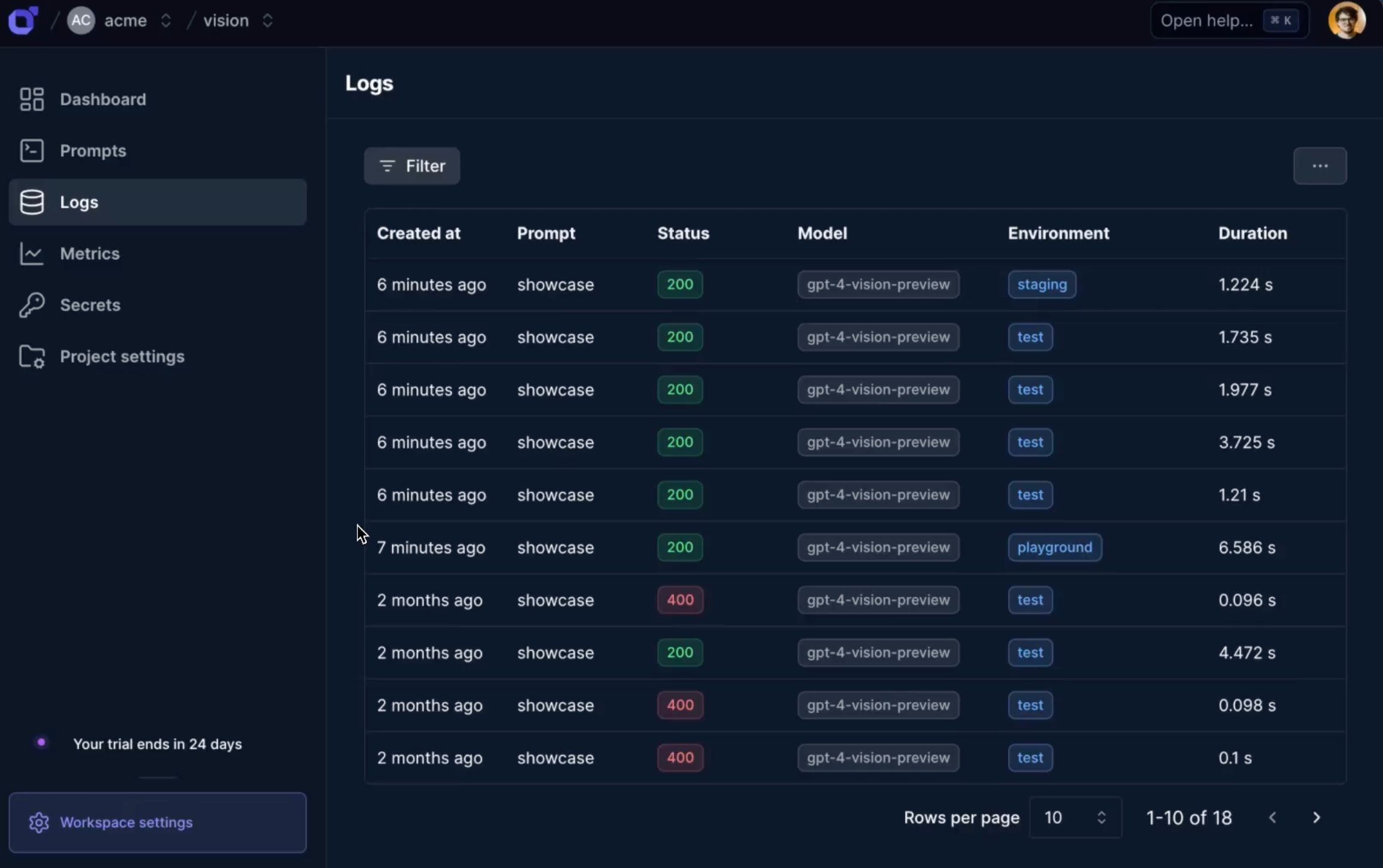

Detailed LLM Logging

Capture essential information for each LLM request

- Request Metadata: Log cost, latency, user ID, prompt, environment, and model.

- Response Details: Capture status, token usage, and request/response views.

- Custom Metadata: Attach custom metadata to logs for filtering and analysis.

- Playground Integration: Open logs in the playground for prompt debugging.

Powerful Log Filtering

Analyze your LLM logs with ease

- Filter by Properties: Filter logs by cost, latency, environment, and more.

- Custom Metadata Filters: Use custom metadata to create targeted filters.

- Save Filter Sets (soon): Save common filter combinations for quick access.

- Export Filtered Logs: Export filtered logs for further analysis or integration.
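To make the logging and filtering features above concrete, here is a minimal Python sketch of what one log entry and a combined property and metadata filter might look like. It is an illustration only: `LogRecord`, `filter_logs`, the field names, and the sample values are all hypothetical and do not represent Langtail's actual API or data model.

```python
from dataclasses import dataclass, field


@dataclass
class LogRecord:
    # Hypothetical shape of one logged LLM request; field names are illustrative.
    prompt: str
    model: str
    environment: str
    user_id: str
    cost_usd: float
    latency_ms: int
    status: int
    metadata: dict = field(default_factory=dict)  # custom key/value pairs


def filter_logs(logs, *, environment=None, max_latency_ms=None, **metadata):
    """Return the logs that match every given property and metadata filter."""
    result = []
    for log in logs:
        if environment is not None and log.environment != environment:
            continue
        if max_latency_ms is not None and log.latency_ms > max_latency_ms:
            continue
        # Every custom metadata filter must match exactly.
        if any(log.metadata.get(key) != value for key, value in metadata.items()):
            continue
        result.append(log)
    return result


# Made-up sample data for the sketch.
logs = [
    LogRecord("summarize", "model-a", "production", "u1", 0.012, 840, 200, {"plan": "pro"}),
    LogRecord("summarize", "model-a", "staging", "u2", 0.010, 620, 200, {"plan": "free"}),
    LogRecord("classify", "model-b", "production", "u3", 0.001, 2100, 200, {"plan": "pro"}),
]

pro_in_production = filter_logs(logs, environment="production", plan="pro")
fast_in_production = filter_logs(logs, environment="production", max_latency_ms=1000)
```

Built-in properties and custom metadata go through the same filter call in this sketch, which mirrors the idea of combining both kinds of filter in one view.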

Insightful Metrics

Understand your LLM usage and performance

- Cost Analysis: Monitor and optimize your LLM spending.

- Usage Metrics: Track request volumes, token usage, and average duration.

- Performance Benchmarking (soon): Compare performance across prompts, models, and environments.

- Alerts and Notifications (soon): Set up custom alerts and notifications for key metrics and thresholds.
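As a rough illustration of the usage metrics listed above, the following Python sketch aggregates request volume, total cost, token usage, and average duration per model from a handful of log rows. The row layout, the `summarize` function, and the numbers are assumptions made for this example, not Langtail's implementation or export format.

```python
from collections import defaultdict

# Hypothetical log rows: (model, cost_usd, total_tokens, duration_ms)
rows = [
    ("model-a", 0.012, 900, 840),
    ("model-a", 0.010, 750, 620),
    ("model-b", 0.001, 300, 2100),
]


def summarize(rows):
    """Aggregate per-model request count, cost, tokens, and average duration."""
    totals = defaultdict(
        lambda: {"requests": 0, "cost_usd": 0.0, "tokens": 0, "duration_ms": 0}
    )
    for model, cost_usd, tokens, duration_ms in rows:
        entry = totals[model]
        entry["requests"] += 1
        entry["cost_usd"] += cost_usd
        entry["tokens"] += tokens
        entry["duration_ms"] += duration_ms

    summary = {}
    for model, entry in totals.items():
        summary[model] = {
            "requests": entry["requests"],
            "cost_usd": entry["cost_usd"],
            "tokens": entry["tokens"],
            "avg_duration_ms": entry["duration_ms"] / entry["requests"],
        }
    return summary


summary = summarize(rows)
```

Grouping by model is just one choice here; the same aggregation could be keyed by prompt or environment instead.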

Engineering and AI teams love Langtail

“Langtail simplifies the development and testing of Deepnote AI, enabling our team to focus on further integrating AI features into our product”

“This is already a killer tool for many use-cases we are already using it for. Super excited for the upcoming features and good luck with the launch and further development! 💜”

“Been using LangTail for a few months now, highly recommend. It has kept me sane. If you want your LLM apps to behave uncontrollably all the time, don't use LangTail. On the other hand, if you are serious about the product you are building, you know what to do :P Love the product and the team's hard work. Keep up the great work!”

“I have used Langtail for prompt refinement, and it was a real timesaver for me. Debugging and refining prompts is sometimes a tedious task, and Langtail makes it so much easier. Good work!”

“LLM products are creating a flurry of bad experiences in their rush to hit the market quickly. But Petr and his team have been demonstrating since day one just how serious they are about doing this job with outstanding designs. I've been following them for over a year now and I highly recommend them to everyone. I'm certain they're going to reach fantastic places.”

“Been using Langtail for a while, and it has made working with our clients a breeze”

“Unpredictable behavior of LLMs, team collaboration on prompts and robust evaluation were the biggest pains for me when I was building my app. But now it's solved thanks to LangTail. It's a great product.”

- Detailed Logging: Capture comprehensive logs for each LLM request, including cost, latency, and more.

- Request Metadata: View detailed information about each request, such as the prompt used, environment, and model.

- Response Analysis: Inspect the status, token usage, and content of each LLM response.

- Playground Integration: Easily open logs in the playground for prompt debugging and optimization.

- Flexible Filtering: Filter logs by any property, including custom metadata, for targeted analysis.

- Custom Metadata: Attach custom metadata to logs for enhanced categorization and filtering.

- Cost Monitoring: Track the cost of LLM usage over time to optimize resource allocation.

- Performance Metrics: Gain insights into request volume, token usage, and average duration per request.

- Future Tracing: Coming soon. Trace related logs together for end-to-end visibility.

Ready to monitor LLM performance?

Start tracking your LLM usage, costs, and performance for free today.