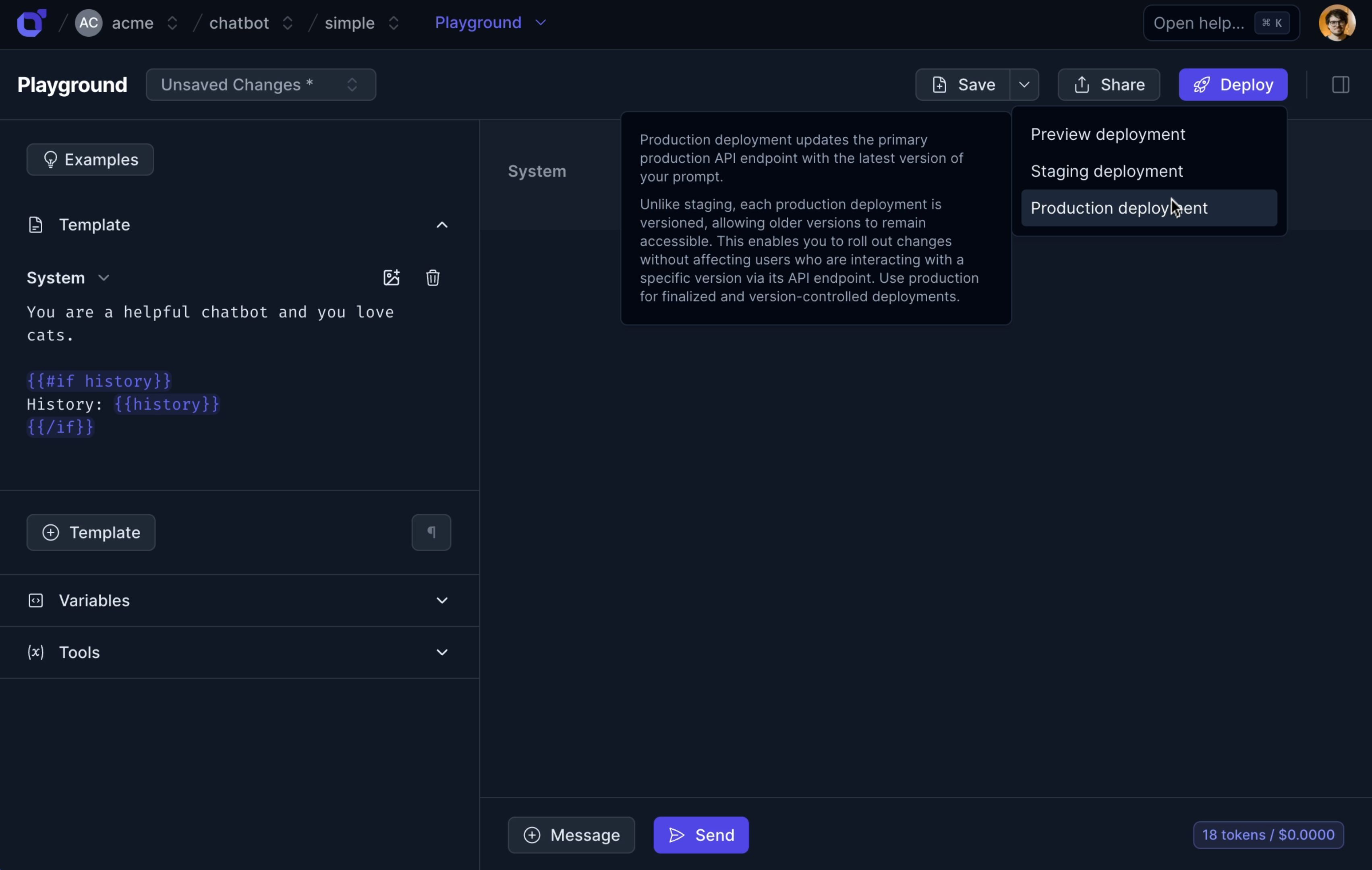

Deploy Prompts as API Endpoints

Transform your prompts into versioned API endpoints, and manage them across production, staging, and preview environments.

Prompt Deployment Made Easy

Deploy prompts as API endpoints

- Versioned API Endpoints

- Deploy prompts as versioned API endpoints for better control and stability.

- Proxy-based Deployment

- Langtail acts as a proxy, handling the communication between your app and the LLM provider.

- Seamless Integration

- Easily integrate deployed prompts into your applications using the provided API endpoints.
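As a rough illustration of what calling a versioned, per-environment endpoint through a proxy could look like, here is a minimal sketch. The base URL, path layout, `v` query parameter, and payload shape are all assumptions made for this example and are not taken from Langtail's documentation; check the endpoint shown in your own deployment for the real values.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Hypothetical base URL and path layout, used only for illustration.
BASE_URL = "https://api.example.com"
ENVIRONMENTS = {"production", "staging", "preview"}


@dataclass(frozen=True)
class DeployedPrompt:
    """Identifies one deployed prompt (hypothetical naming scheme)."""
    prompt_slug: str
    environment: str
    version: Optional[int] = None  # None means "whatever is currently deployed"

    def url(self) -> str:
        if self.environment not in ENVIRONMENTS:
            raise ValueError(f"unknown environment: {self.environment}")
        url = f"{BASE_URL}/{self.prompt_slug}/{self.environment}"
        if self.version is not None:
            # Pinning a version keeps behavior stable while the prompt evolves.
            url += f"?v={self.version}"
        return url


def build_request(prompt: DeployedPrompt, api_key: str,
                  variables: Dict[str, Any]) -> Dict[str, Any]:
    """Describe the HTTP request an app would send to the proxy.

    The proxy, not the app, would then talk to the LLM provider.
    The payload shape here is an assumption.
    """
    return {
        "method": "POST",
        "url": prompt.url(),
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({"variables": variables}),
    }


if __name__ == "__main__":
    pinned = DeployedPrompt("support-reply", "staging", version=3)
    print(build_request(pinned, "YOUR_API_KEY", {"customer": "Ada"})["url"])
```

Because the sketch only builds the request description, it can be handed to any HTTP client; the app never needs provider credentials or the prompt text itself.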

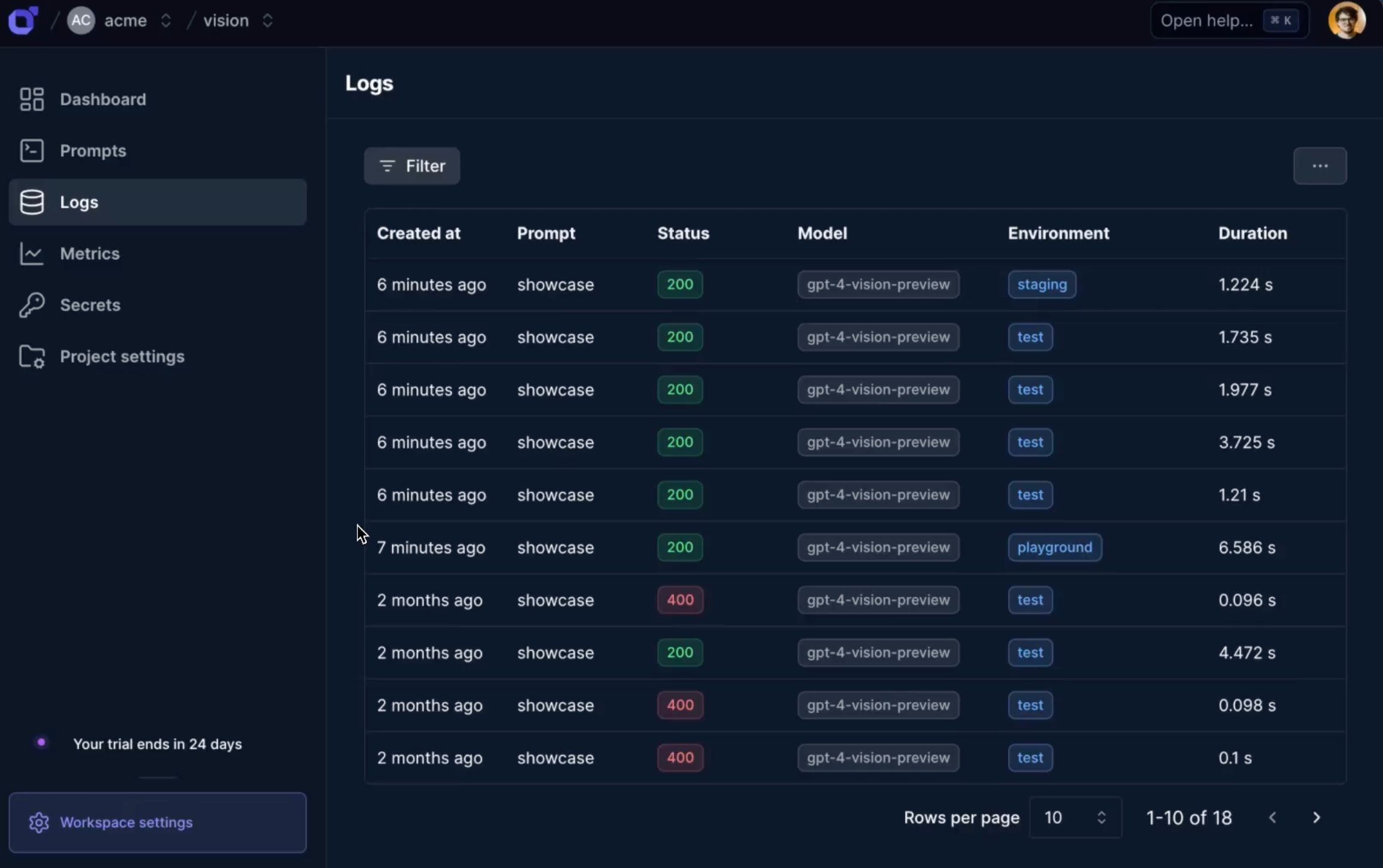

Comprehensive Logging

Track prompt usage across all environments

- Detailed Logs

- Access detailed logs for each deployed prompt and environment.

- Playground Integration

- Open deployed prompt versions directly in the Playground for debugging.

- Usage Insights

- Gain valuable insights into prompt usage and performance.

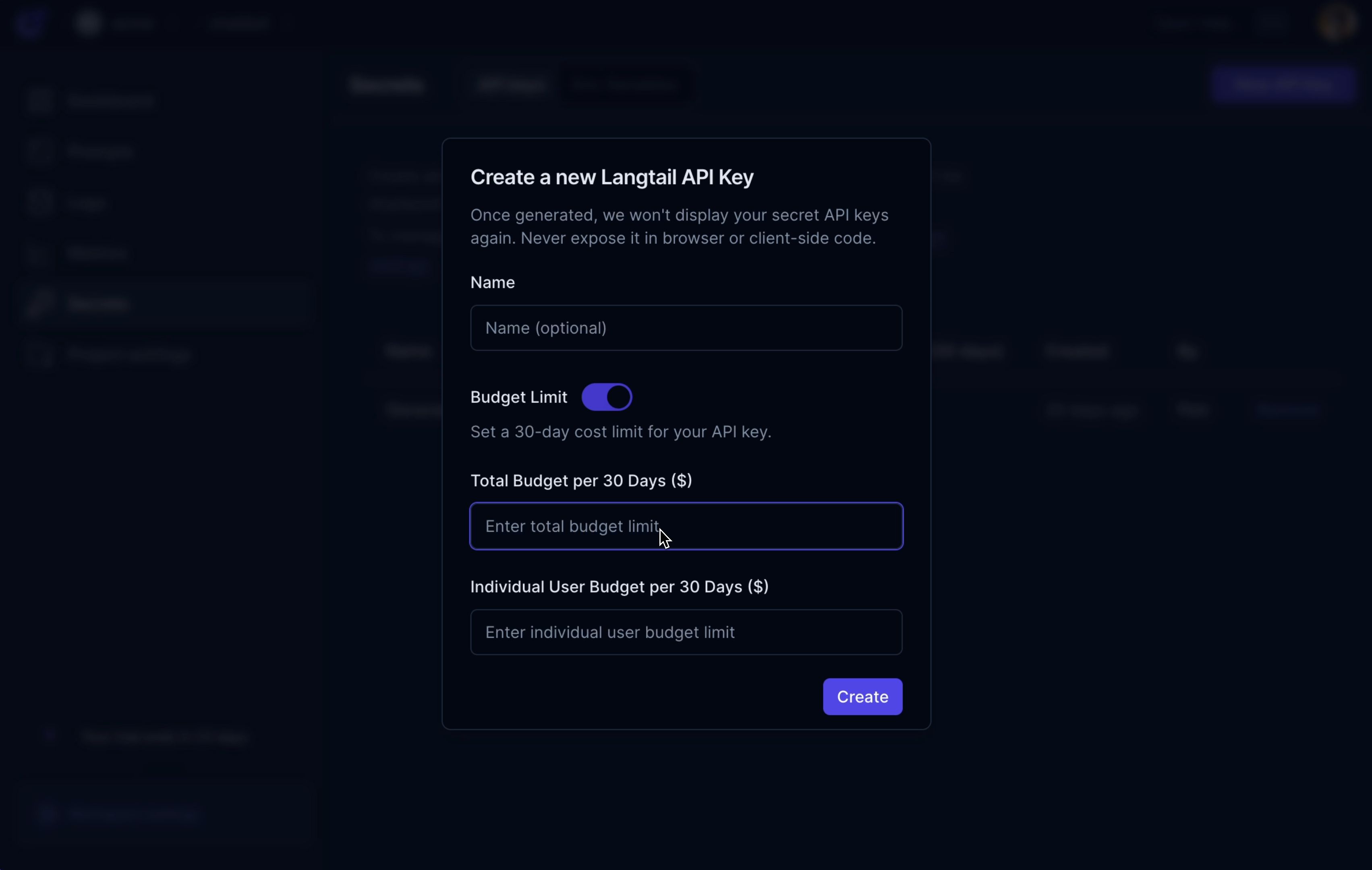

Advanced Configuration

Refine deployed prompts for optimal performance

- Rate Limiting

- Set rate limits for your deployed prompts based on budget constraints.

- Live Testing (Coming Soon)

- Perform live tests on your deployed prompts to ensure quality and reliability.

- A/B Testing & Fallbacks (Coming Soon)

- Conduct A/B tests and set up fallback prompts for uninterrupted service.
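To make the idea of budget-based rate limiting concrete, the sketch below turns a monthly budget into a requests-per-minute cap and enforces it with a simple fixed-window counter. This is an illustration of the general technique only; how Langtail computes or enforces limits is not described here, and the function and class names are hypothetical.

```python
import time
from typing import Callable


def requests_per_minute(monthly_budget_usd: float,
                        cost_per_request_usd: float,
                        days: int = 30) -> int:
    """Spread a monthly budget evenly over every minute of the month."""
    if cost_per_request_usd <= 0:
        raise ValueError("cost_per_request_usd must be positive")
    affordable_requests = monthly_budget_usd / cost_per_request_usd
    minutes_in_month = days * 24 * 60
    return int(affordable_requests // minutes_in_month)


class FixedWindowLimiter:
    """Allow at most `limit` calls per window; reset when the window expires."""

    def __init__(self, limit: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.window_start = clock()
        self.count = 0

    def allow(self) -> bool:
        now = self.clock()
        if now - self.window_start >= self.window_seconds:
            # A new window begins: forget the previous window's calls.
            self.window_start = now
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


if __name__ == "__main__":
    limit = requests_per_minute(monthly_budget_usd=43200.0,
                                cost_per_request_usd=0.25)
    limiter = FixedWindowLimiter(limit)
    print(limit, [limiter.allow() for _ in range(limit + 1)])
```

A fixed window is the simplest choice and permits short bursts at window boundaries; a token bucket or sliding window would smooth those out at the cost of a little more state.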

Engineering and AI teams love Langtail

“Langtail simplifies the development and testing of Deepnote AI, enabling our team to focus on further integrating AI features into our product”

“This is already a killer tool for many use-cases we are already using it for. Super excited for the upcoming features and good luck with the launch and further development! 💜”

“Been using LangTail for a few months now, highly recommend. It has kept me sane. If you want your LLM apps to behave uncontrollably all the time, don't use LangTail. On the other hand, if you are serious about the product you are building, you know what to do :P Love the product and the team's hard work. Keep up the great work!”

“I have used Langtail for prompt refinement, and it was a real timesaver for me. Debugging and refining prompts is sometimes a tedious task, and Langtail makes it so much easier. Good work!”

“LLM products are creating a flurry of bad experiences in their rush to hit the market quickly. But Petr and his team have been demonstrating since day one just how serious they are about doing this job with outstanding designs. I've been following them for over a year now and I highly recommend them to everyone. I'm certain they're going to reach fantastic places.”

“Been using Langtail for a while, and it has made working with our clients a breeze”

“Unpredictable behavior of LLMs, team collaboration on prompts and robust evaluation were the biggest pains for me when I was building my app. But now it's solved thanks to LangTail. It's a great product.”

- Multi-environment Deployments

- Deploy prompts to production, staging, and preview environments.

- Versioned API Endpoints

- Access specific versions of your deployed prompts via API.

- Proxy-based Architecture

- Langtail handles communication between your app and the LLM provider.

- Detailed Logging

- Track prompt usage and performance across all environments.

- Playground Integration

- Open deployed prompt versions in the Playground for debugging.

- Rate Limiting

- Set rate limits for deployed prompts based on budget constraints.

- Live Testing (Coming Soon)

- Perform live tests on deployed prompts to ensure quality.

- A/B Testing (Coming Soon)

- Conduct A/B tests to optimize prompt performance.

- Fallback Prompts (Coming Soon)

- Set up fallback prompts for uninterrupted service.


Ready to monitor LLM performance?

Start tracking your LLM usage, costs, and performance for free today.